Related links

kube-prometheus GitHub repository: https://github.com/coreos/kube-prometheus

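Component overview

- MetricServer: the aggregator of resource usage data for the Kubernetes cluster. It collects data for consumers inside the cluster, such as kubectl, HPA, and the scheduler.

- PrometheusOperator: deploys and manages Prometheus, a system monitoring and alerting toolkit that stores the monitoring data.

- NodeExporter: exposes the key metrics that describe the state of each node.

- KubeStateMetrics: collects data about the resource objects in the Kubernetes cluster, on which alert rules can be defined.

- Prometheus: collects data from the apiserver, scheduler, controller-manager, and kubelet components using a pull model, transported over HTTP.

- Grafana: a platform for visualizing statistics and monitoring data.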

Build log

git clone https://github.com/coreos/kube-prometheus.git

cd /root/kube-prometheus/manifests

Edit grafana-service.yaml so that Grafana is reachable through a NodePort:

vim grafana-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: monitoring
spec:
  type: NodePort # added
  ports:
  - name: http
    port: 3000
    targetPort: http
    nodePort: 30100 # added
  selector:
    app: grafana
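A nodePort value has to fall inside the API server's NodePort range, which is 30000-32767 unless the kube-apiserver --service-node-port-range flag was changed. The sketch below checks candidate ports against that default range. `valid_node_port` is a hypothetical helper name, and the default range is an assumption about this cluster.

```shell
# Hypothetical helper: succeeds if $1 lies in the default NodePort range.
# Assumes the cluster keeps the default --service-node-port-range (30000-32767).
valid_node_port() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Ports used for the Services in this walkthrough.
for port in 30100 30200 30300; do
  if valid_node_port "$port"; then
    echo "$port ok"
  else
    echo "$port out of range"
  fi
done
```

A value outside the range is rejected by the API server when the Service is applied, so a check like this only saves a round trip.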

Edit prometheus-service.yaml and change it to NodePort:

apiVersion: v1
kind: Service
metadata:
  labels:
    prometheus: k8s
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort # added
  ports:
  - name: web
    port: 9090
    targetPort: web
    nodePort: 30200 # added
  selector:
    app: prometheus
    prometheus: k8s
  sessionAffinity: ClientIP

Edit alertmanager-service.yaml and change it to NodePort:

vim alertmanager-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    alertmanager: main
  name: alertmanager-main
  namespace: monitoring
spec:
  type: NodePort
  ports:
  - name: web
    port: 9093
    targetPort: web
    nodePort: 30300
  selector:
    alertmanager: main
    app: alertmanager
  sessionAffinity: ClientIP

Horizontal Pod Autoscaling

Horizontal Pod Autoscaling can automatically scale the number of Pods in a Replication Controller, Deployment, or Replica Set based on CPU utilization.
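The scaling decision follows the formula given in the Kubernetes documentation: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), clamped to the configured minimum and maximum. The sketch below reproduces that arithmetic with integers. `desired_replicas` is a hypothetical helper, the 50% target and the 1..10 bounds are illustrative values, and the controller's tolerance band and stabilization behaviour are ignored.

```shell
# desired_replicas <current_replicas> <current_cpu_%> <target_cpu_%> <min> <max>
# Hypothetical helper; ignores the HPA tolerance and stabilization window.
desired_replicas() {
  # Integer ceiling of current_replicas * current / target.
  want=$(( ($1 * $2 + $3 - 1) / $3 ))
  if [ "$want" -lt "$4" ]; then want=$4; fi   # clamp to the minimum
  if [ "$want" -gt "$5" ]; then want=$5; fi   # clamp to the maximum
  echo "$want"
}

desired_replicas 1 200 50 1 10   # 1 replica at 200% of its request -> 4
desired_replicas 4 10 50 1 10    # 4 replicas at 10% -> 1
desired_replicas 3 500 50 1 10   # would be 30, clamped to 10
```

CPU utilization here is a percentage of the Pod's CPU request, which is why the target workload needs a CPU request for the autoscaler to work.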

kubectl run php-apache --image=gcr.io/google_containers/hpa-example --requests=cpu=200m --expose --port=80

Create the HPA controller. For details of the related algorithm, see this document.

kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10

Increase the load and watch the number of replicas:

$ kubectl run -i --tty load-generator --image=busybox /bin/sh

$ while true; do wget -q -O- http://php-apache.default.svc.cluster.local; done

Resource limits - Pod

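Kubernetes enforces resource limits through cgroups. A cgroup is a set of attributes attached to a container that controls how the kernel runs its processes. There are cgroups for memory, CPU, and various devices.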
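As a rough illustration of that mapping, the sketch below converts CPU values in millicores into the cgroup settings the kubelet derives from them: a CPU request becomes cpu.shares and a CPU limit becomes cpu.cfs_quota_us. It assumes cgroup v1 and the default CFS period of 100000 microseconds. The helper names and the sample values (250m and 4 CPUs) are illustrative only.

```shell
# Assumption: cgroup v1 with the default CFS period of 100000 microseconds.

# cpu_shares <request in millicores> -> value for cpu.shares
cpu_shares() {
  shares=$(( $1 * 1024 / 1000 ))
  if [ "$shares" -lt 2 ]; then shares=2; fi   # 2 is the smallest share value
  echo "$shares"
}

# cpu_quota <limit in millicores> -> value for cpu.cfs_quota_us
cpu_quota() {
  echo $(( $1 * 100000 / 1000 ))
}

cpu_shares 250    # a request of 250m
cpu_quota 4000    # a limit of 4 CPUs
```

Shares only matter when CPUs are contended, while the quota is a hard ceiling per period. That is the practical difference between a request and a limit.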

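By default, a Pod runs with no CPU or memory limits. This means any Pod in the system can consume as much CPU and memory as the node it runs on has available. Resource limits are usually applied to the Pods of particular applications, and they are set through the requests and limits fields under resources: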

spec:
  containers:
  - image: xxxx
    imagePullPolicy: Always
    name: auth
    ports:
    - containerPort: 8080
      protocol: TCP
    resources:
      limits:
        cpu: "4"
        memory: 2Gi
      requests:
        cpu: 250m
        memory: 250Mi

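requests is the amount of resources to allocate, and limits is the maximum amount that may be used. Put simply, they are the initial value and the maximum value.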
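The API server rejects a container whose request exceeds its limit, so the two values can be sanity-checked before applying a manifest. The sketch below normalizes CPU quantities to millicores and compares them. `to_millicores` and `request_fits_limit` are hypothetical helpers, and they only handle whole numbers and the `m` suffix (not decimals such as 0.5).

```shell
# to_millicores <cpu quantity>: "250m" -> 250, "4" -> 4000
# Hypothetical helper; decimal quantities such as "0.5" are not handled.
to_millicores() {
  case "$1" in
    *m) echo "${1%m}" ;;            # already in millicores, strip the suffix
    *)  echo $(( $1 * 1000 )) ;;    # whole CPUs to millicores
  esac
}

# request_fits_limit <cpu request> <cpu limit>: succeeds if request <= limit
request_fits_limit() {
  [ "$(to_millicores "$1")" -le "$(to_millicores "$2")" ]
}

# Values from the Pod spec above.
if request_fits_limit 250m 4; then
  echo "request <= limit"
else
  echo "request exceeds limit"
fi
```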

Resource limits - Namespace

I. Compute resource quota

apiVersion: v1
kind: ResourceQuota
metadata:
  name: compute-resources
  namespace: spark-cluster
spec:
  hard:
    pods: "20"
    requests.cpu: "20"
    requests.memory: 100Gi
    limits.cpu: "40"
    limits.memory: 200Gi

II. Object count quota

apiVersion: v1
kind: ResourceQuota
metadata:
  name: object-counts
  namespace: spark-cluster
spec:
  hard:
    configmaps: "10"
    persistentvolumeclaims: "4"
    replicationcontrollers: "20"
    secrets: "10"
    services: "10"
    services.loadbalancers: "2"

III. CPU and memory LimitRange

apiVersion: v1
kind: LimitRange
metadata:
  name: mem-limit-range
spec:
  limits:
  - default:
      memory: 50Gi
      cpu: 5
    defaultRequest:
      memory: 1Gi
      cpu: 1
    type: Container

default is the limit value, and defaultRequest is the request value.
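How these defaults are applied depends on what the container itself specifies. If it sets neither value, it receives defaultRequest and default. If it sets only a limit, the request is set equal to that limit. If it sets only a request, the limit comes from default. The sketch below encodes those rules with two hypothetical helpers. An empty string stands for "not specified", and the values are plain strings rather than parsed quantities.

```shell
# effective_request <request or ""> <limit or ""> <defaultRequest>
effective_request() {
  if [ -n "$1" ]; then
    echo "$1"        # an explicit request always wins
  elif [ -n "$2" ]; then
    echo "$2"        # only a limit was given: request is set to the limit
  else
    echo "$3"        # nothing given: fall back to defaultRequest
  fi
}

# effective_limit <limit or ""> <default>
effective_limit() {
  if [ -n "$1" ]; then echo "$1"; else echo "$2"; fi
}

# Memory values from the LimitRange above.
effective_request "" "" 1Gi      # nothing specified
effective_request "" 2Gi 1Gi     # only a limit of 2Gi specified
effective_limit "" 50Gi          # no limit specified
```

The middle case is the one that tends to surprise: a container with only a limit ends up with a request equal to that limit, not with defaultRequest.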

# Now create Prometheus

# Update the namespace and CRDs, and then wait for them to be available before creating the remaining resources

$ cd /usr/local/install-k8s/plugin/prometheus/kube-prometheus

$ kubectl apply -f manifests/setup

$ kubectl apply -f manifests/
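The comment above asks for a wait between the two apply commands, so that the CRDs from manifests/setup are registered before the remaining resources are created. One way to do that is a small retry loop, sketched below. `wait_for` is a hypothetical helper, and the kubectl probe in the comment is an assumption about what signals readiness here (the ServiceMonitor CRD being served).

```shell
# wait_for <max_tries> <delay_seconds> <command...>
# Hypothetical helper: retries the command until it succeeds or tries run out.
wait_for() {
  tries=$1
  delay=$2
  shift 2
  i=0
  while [ "$i" -lt "$tries" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0                 # command succeeded, stop waiting
    fi
    i=$(( i + 1 ))
    sleep "$delay"
  done
  return 1                     # gave up
}

# Intended use between the two apply steps (needs cluster access, so not run here):
#   kubectl apply -f manifests/setup
#   wait_for 60 1 kubectl get servicemonitors --all-namespaces
#   kubectl apply -f manifests/

# Self-contained demonstration with a command that always succeeds.
if wait_for 3 0 true; then echo "ready"; fi
```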

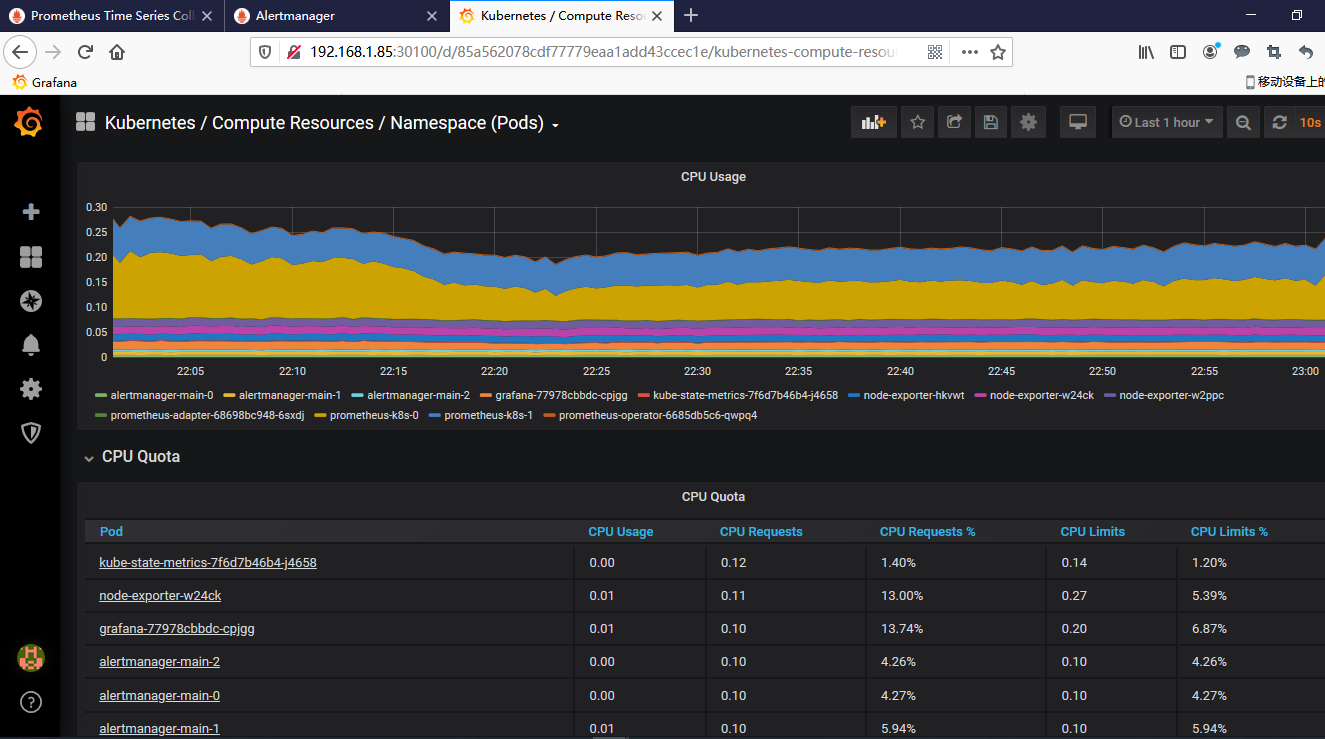

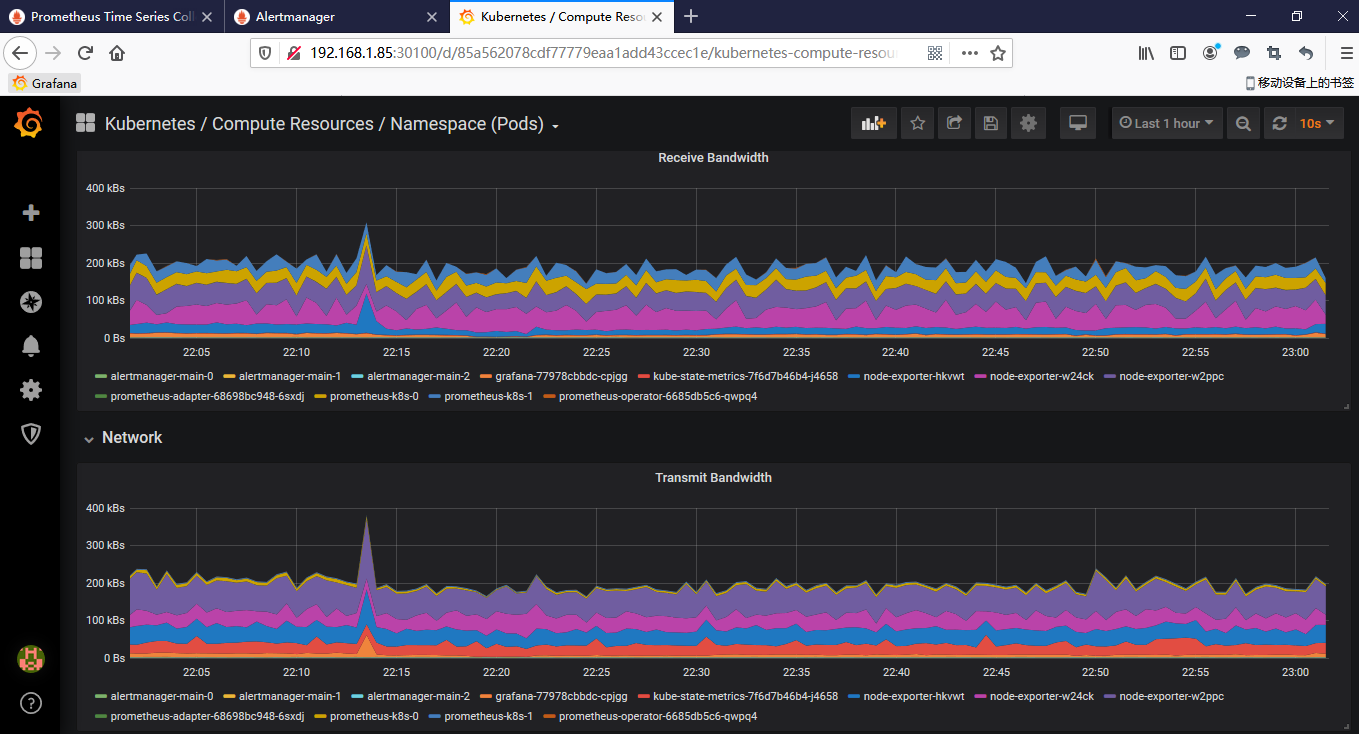

[root@k8s-master kube-prometheus]# kubectl get pod -n monitoring
NAME                                  READY   STATUS    RESTARTS   AGE
alertmanager-main-0                   2/2     Running   0          3m
alertmanager-main-1                   2/2     Running   0          3m
alertmanager-main-2                   2/2     Running   0          3m
grafana-77978cbbdc-cpjgg              1/1     Running   0          3m5s
kube-state-metrics-7f6d7b46b4-j4658   3/3     Running   0          3m6s
node-exporter-hkvwt                   2/2     Running   0          3m5s
node-exporter-w24ck                   2/2     Running   0          3m5s
node-exporter-w2ppc                   2/2     Running   0          3m5s
prometheus-adapter-68698bc948-6sxdj   1/1     Running   0          3m5s
prometheus-k8s-0                      3/3     Running   1          2m49s
prometheus-k8s-1                      3/3     Running   1          2m49s
prometheus-operator-6685db5c6-qwpq4   1/1     Running   0          3m18s

[root@k8s-master kube-prometheus]# kubectl get namespaces
NAME              STATUS   AGE
default           Active   14d
ingress-nginx     Active   8d
kube-node-lease   Active   14d
kube-public       Active   14d
kube-system       Active   14d
monitoring        Active   27m

[root@k8s-master kube-prometheus]# kubectl get svc -n monitoring
NAME                    TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
alertmanager-main       NodePort    10.101.14.181    <none>        9093:30300/TCP               3m58s
alertmanager-operated   ClusterIP   None             <none>        9093/TCP,9094/TCP,9094/UDP   3m52s
grafana                 NodePort    10.96.206.146    <none>        3000:30100/TCP               3m58s
kube-state-metrics      ClusterIP   None             <none>        8443/TCP,9443/TCP            3m58s
node-exporter           ClusterIP   None             <none>        9100/TCP                     3m58s
prometheus-adapter      ClusterIP   10.106.155.187   <none>        443/TCP                      3m57s
prometheus-k8s          NodePort    10.104.145.175   <none>        9090:30200/TCP               3m57s
prometheus-operated     ClusterIP   None             <none>        9090/TCP                     3m41s
prometheus-operator     ClusterIP   None             <none>        8080/TCP                     4m11s

[root@k8s-master kube-prometheus]# kubectl get deployment -n monitoring -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

grafana 1/1 1 1 12h grafana grafana/grafana:6.4.3 app=grafana

kube-state-metrics 1/1 1 1 12h kube-rbac-proxy-main,kube-rbac-proxy-self,kube-state-metrics quay.io/coreos/kube-rbac-proxy:v0.4.1,quay.io/coreos/kube-rbac-proxy:v0.4.1,quay.io/coreos/kube-state-metrics:v1.8.0 app=kube-state-metrics

prometheus-adapter 1/1 1 1 12h prometheus-adapter quay.io/coreos/k8s-prometheus-adapter-amd64:v0.5.0 name=prometheus-adapter

prometheus-operator 1/1 1 1 12h prometheus-operator quay.io/coreos/prometheus-operator:v0.34.0 app.kubernetes.io/component=controller,app.kubernetes.io/name=prometheus-operator

[root@k8s-master kube-prometheus]# kubectl get svc -n monitoring -o wide
NAME                    TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE   SELECTOR
alertmanager-main       NodePort    10.101.14.181    <none>        9093:30300/TCP               12h   alertmanager=main,app=alertmanager
alertmanager-operated   ClusterIP   None             <none>        9093/TCP,9094/TCP,9094/UDP   12h   app=alertmanager
grafana                 NodePort    10.96.206.146    <none>        3000:30100/TCP               12h   app=grafana
kube-state-metrics      ClusterIP   None             <none>        8443/TCP,9443/TCP            12h   app=kube-state-metrics
node-exporter           ClusterIP   None             <none>        9100/TCP                     12h   app=node-exporter
prometheus-adapter      ClusterIP   10.106.155.187   <none>        443/TCP                      12h   name=prometheus-adapter
prometheus-k8s          NodePort    10.104.145.175   <none>        9090:30200/TCP               12h   app=prometheus,prometheus=k8s
prometheus-operated     ClusterIP   None             <none>        9090/TCP                     12h   app=prometheus
prometheus-operator     ClusterIP   None             <none>        8080/TCP                     12h   app.kubernetes.io/component=controller,app.kubernetes.io/name=prometheus-operator

[root@k8s-master kube-prometheus]# kubectl get rs -n monitoring -o wide

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

grafana-77978cbbdc 1 1 1 12h grafana grafana/grafana:6.4.3 app=grafana,pod-template-hash=77978cbbdc

kube-state-metrics-7f6d7b46b4 1 1 1 12h kube-rbac-proxy-main,kube-rbac-proxy-self,kube-state-metrics quay.io/coreos/kube-rbac-proxy:v0.4.1,quay.io/coreos/kube-rbac-proxy:v0.4.1,quay.io/coreos/kube-state-metrics:v1.8.0 app=kube-state-metrics,pod-template-hash=7f6d7b46b4

prometheus-adapter-68698bc948 1 1 1 12h prometheus-adapter quay.io/coreos/k8s-prometheus-adapter-amd64:v0.5.0 name=prometheus-adapter,pod-template-hash=68698bc948

prometheus-operator-6685db5c6 1 1 1 12h prometheus-operator quay.io/coreos/prometheus-operator:v0.34.0 app.kubernetes.io/component=controller,app.kubernetes.io/name=prometheus-operator,pod-template-hash=6685db5c6

[root@k8s-master kube-prometheus]# kubectl get pod -n monitoring -o wide
NAME                                  READY   STATUS    RESTARTS   AGE   IP             NODE         NOMINATED NODE   READINESS GATES
alertmanager-main-0                   2/2     Running   0          12h   10.244.3.54    k8s-node2    <none>           <none>
alertmanager-main-1                   2/2     Running   0          12h   10.244.1.66    k8s-node1    <none>           <none>
alertmanager-main-2                   2/2     Running   0          12h   10.244.3.55    k8s-node2    <none>           <none>
grafana-77978cbbdc-cpjgg              1/1     Running   0          12h   10.244.3.53    k8s-node2    <none>           <none>
kube-state-metrics-7f6d7b46b4-j4658   3/3     Running   0          12h   10.244.3.52    k8s-node2    <none>           <none>
node-exporter-hkvwt                   2/2     Running   0          12h   192.168.1.85   k8s-master   <none>           <none>
node-exporter-w24ck                   2/2     Running   0          12h   192.168.1.38   k8s-node1    <none>           <none>
node-exporter-w2ppc                   2/2     Running   0          12h   192.168.1.86   k8s-node2    <none>           <none>
prometheus-adapter-68698bc948-6sxdj   1/1     Running   0          12h   10.244.1.65    k8s-node1    <none>           <none>
prometheus-k8s-0                      3/3     Running   1          12h   10.244.3.56    k8s-node2    <none>           <none>
prometheus-k8s-1                      3/3     Running   1          12h   10.244.1.67    k8s-node1    <none>           <none>
prometheus-operator-6685db5c6-qwpq4   1/1     Running   0          12h   10.244.1.64    k8s-node1    <none>           <none>

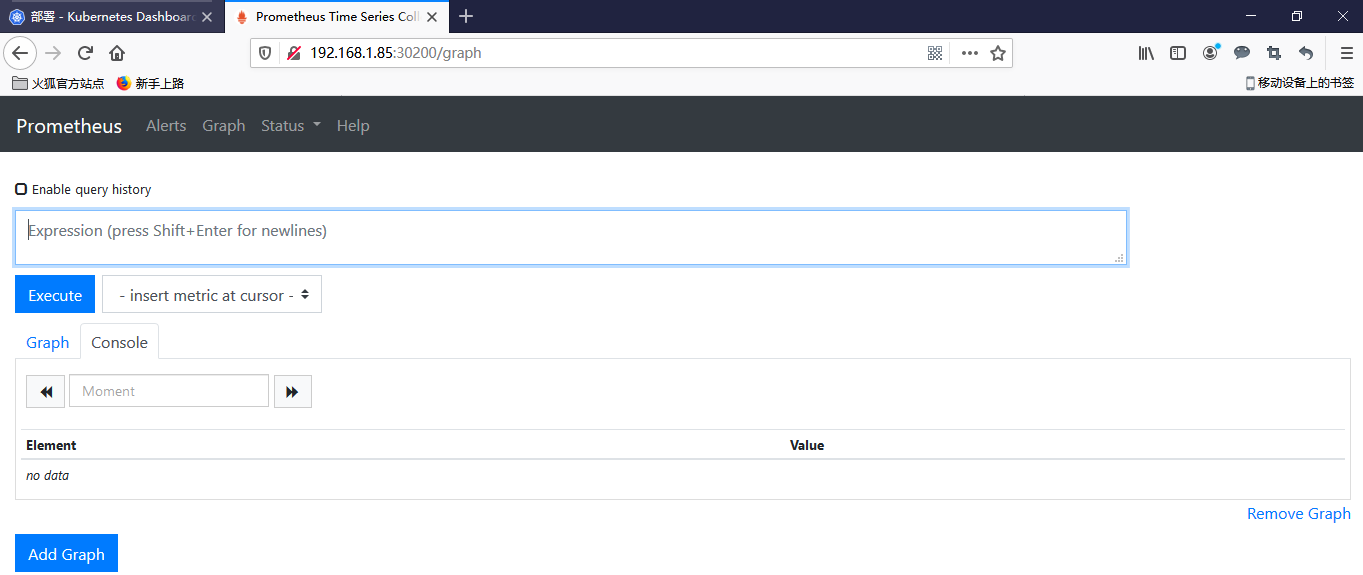

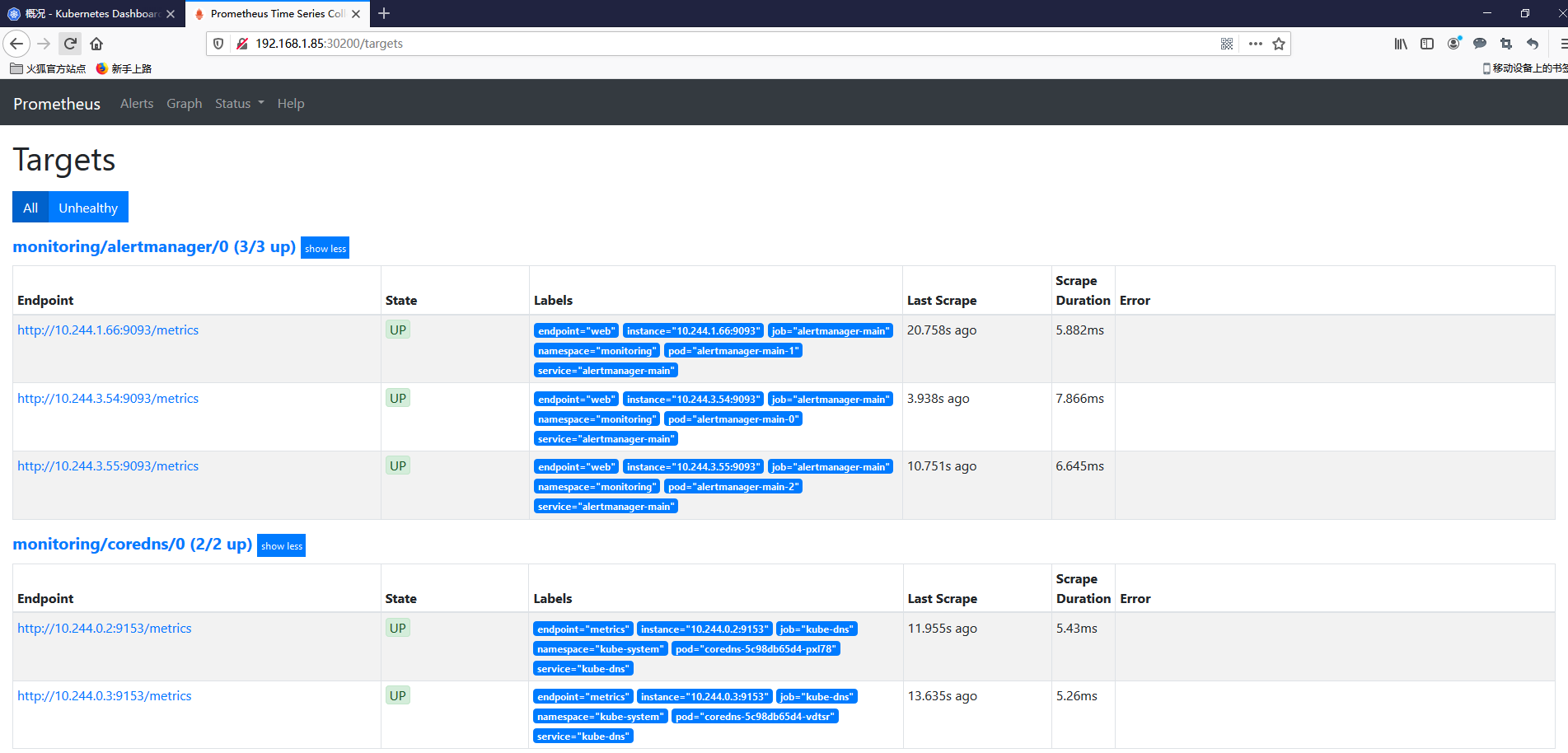

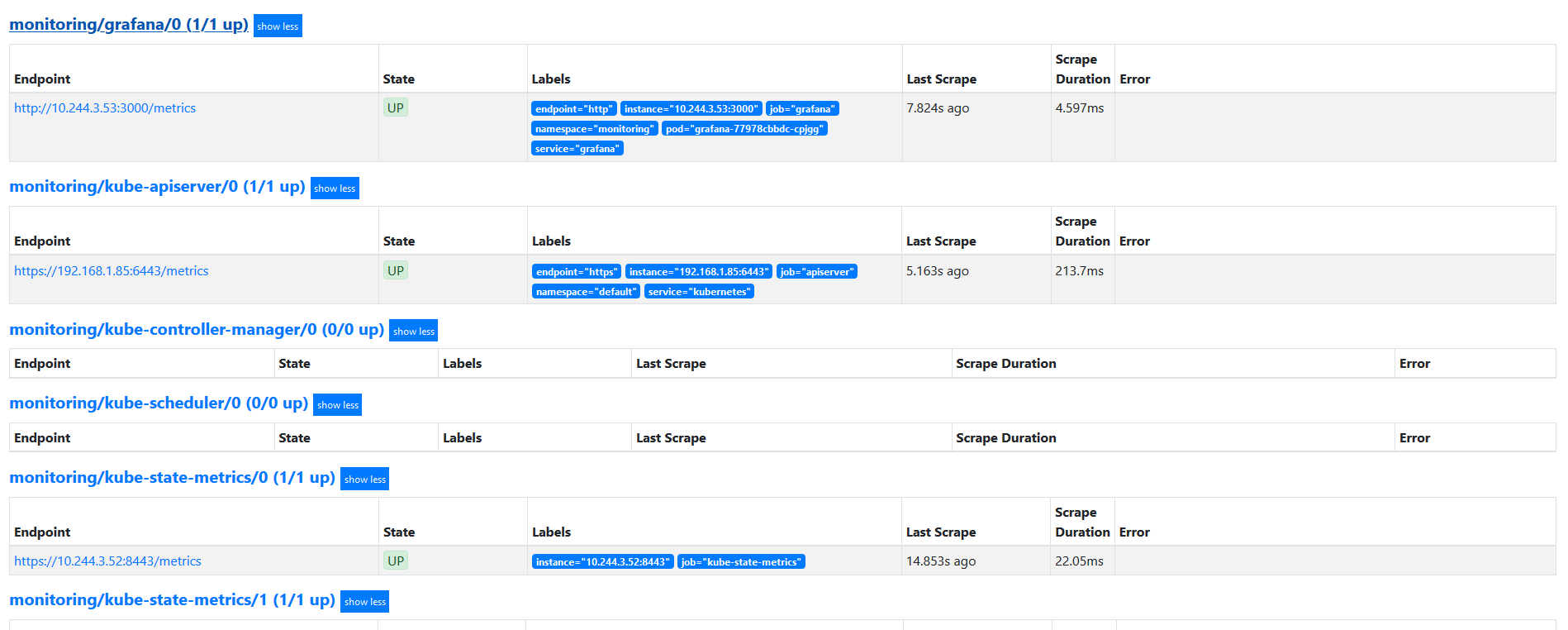

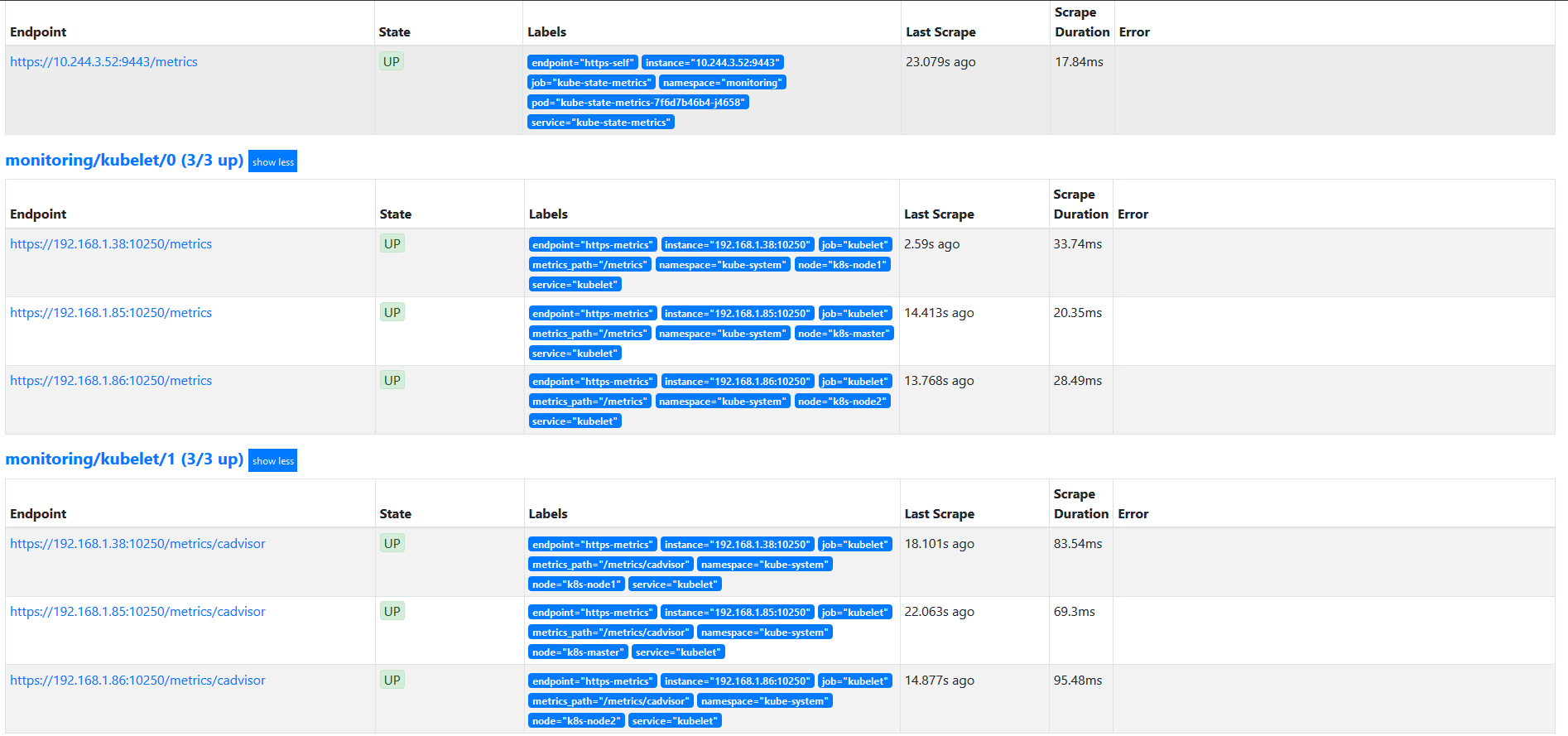

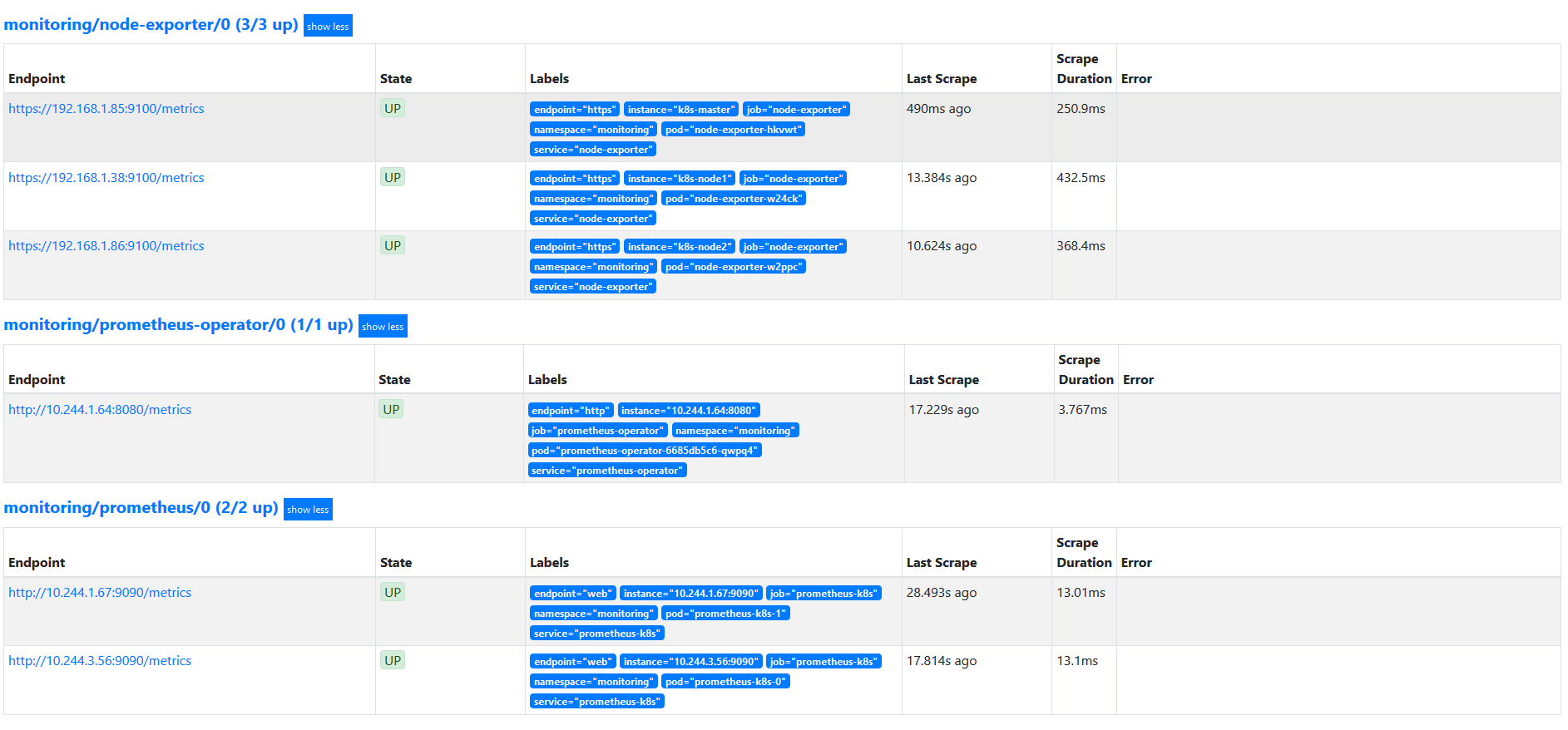

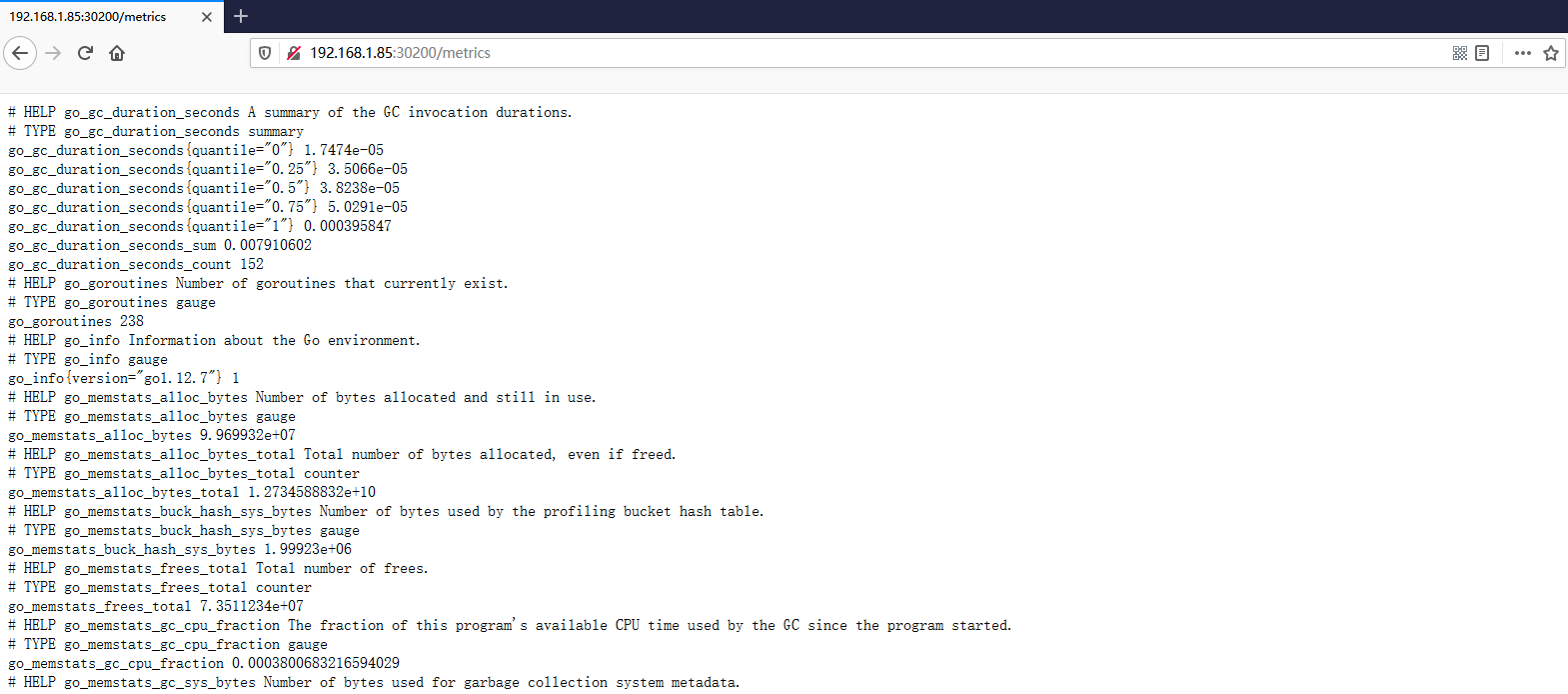

Accessing Prometheus

The NodePort for prometheus is 30200. Open http://k8s-master:30200, http://k8s-node1:30200, or http://k8s-node2:30200.

The page at http://k8s-master:30200/targets shows that Prometheus has successfully connected to the k8s apiserver.

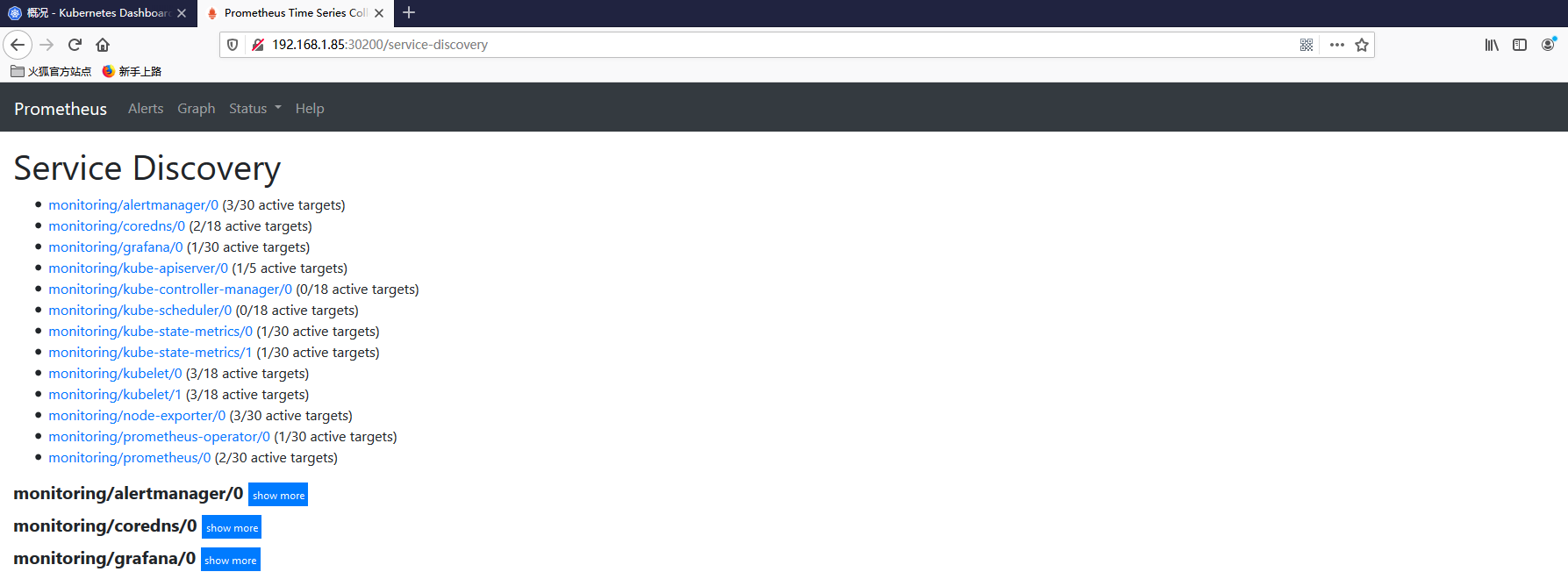

View service-discovery

Prometheus's own metrics

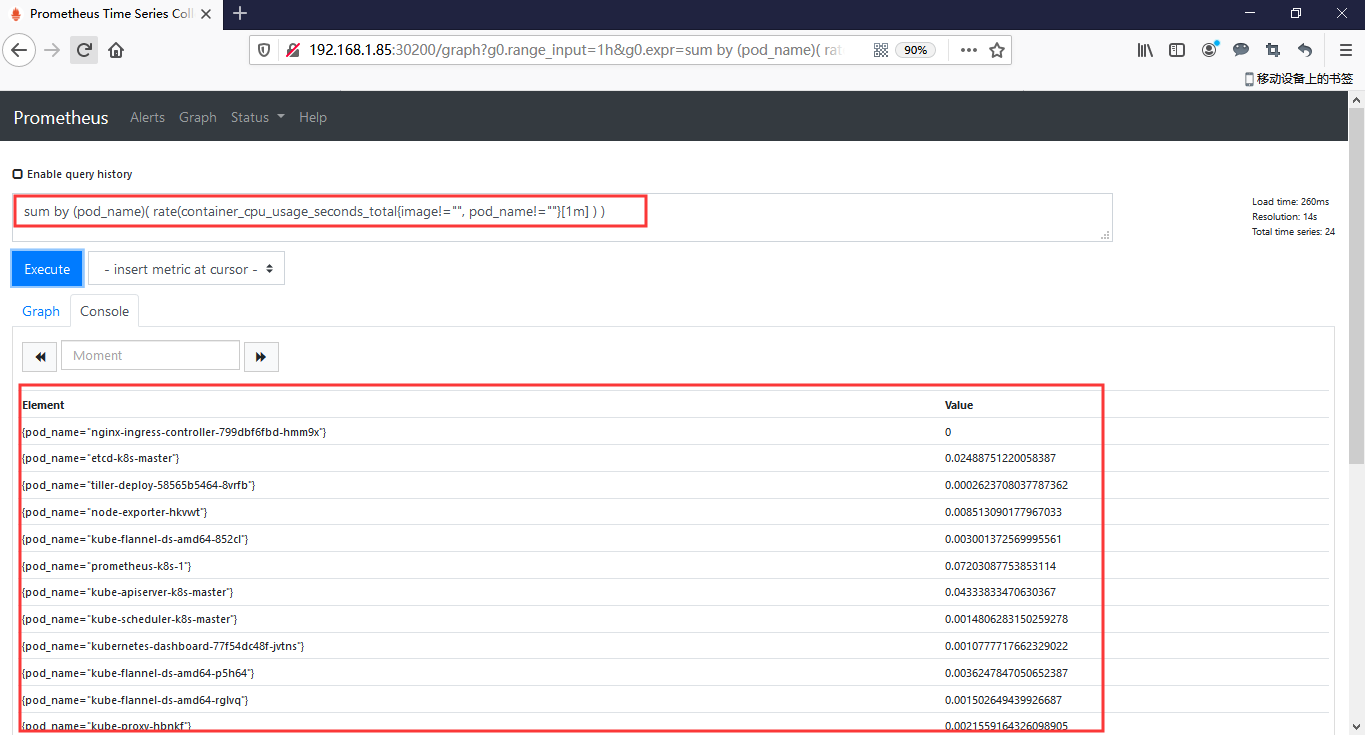

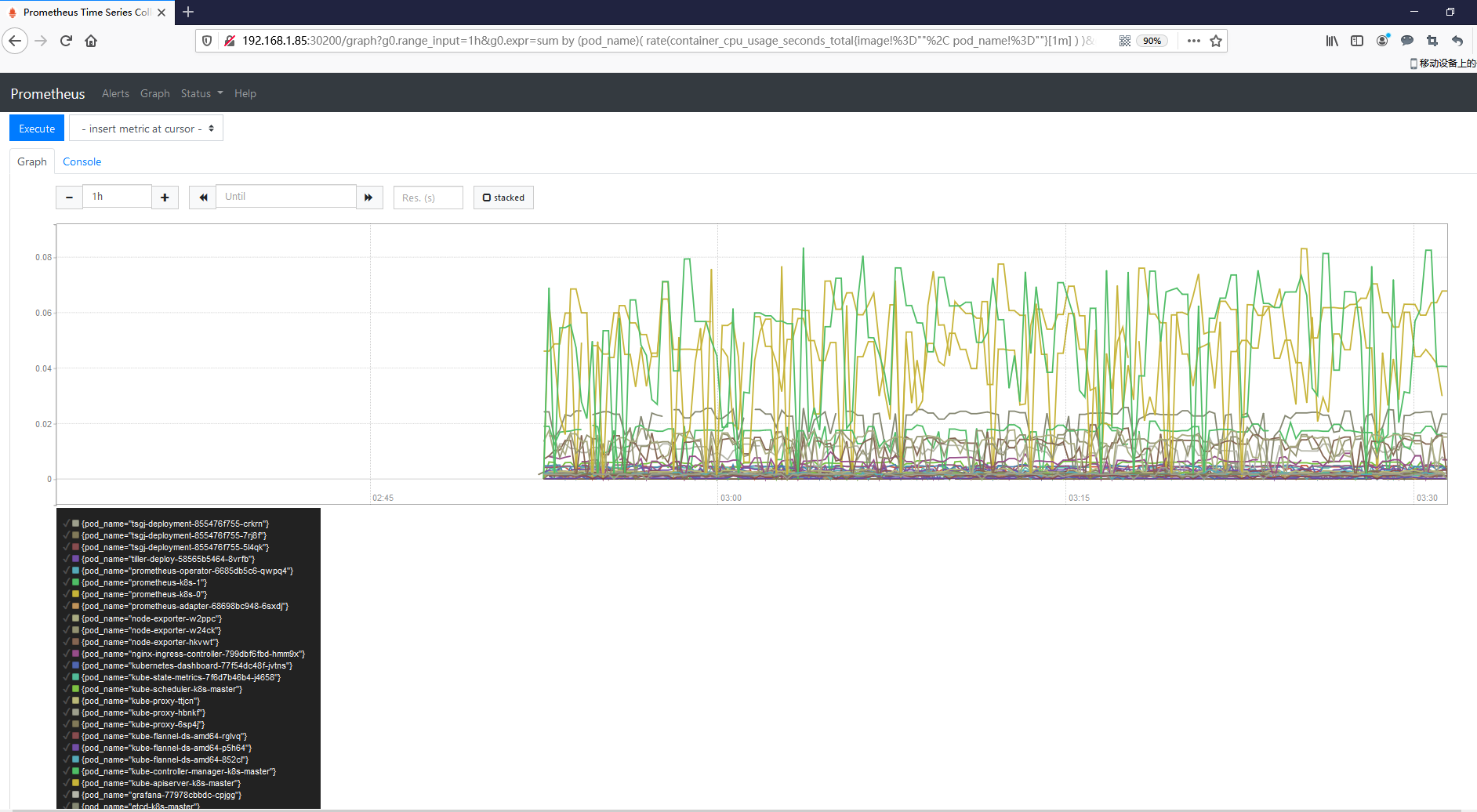

The Prometheus web UI supports basic queries, such as the CPU usage of each Pod in the k8s cluster. The query is:

sum by (pod_name)( rate(container_cpu_usage_seconds_total{image!="", pod_name!=""}[1m] ) )

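If the query above returns data, metrics are reaching Prometheus correctly (container_cpu_usage_seconds_total is scraped from the kubelet's built-in cAdvisor rather than from node-exporter). Next we can turn to the Grafana component for a friendlier web UI over the data.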
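The same query can also be sent from the command line through the Prometheus HTTP API endpoint /api/v1/query. In the sketch below, `prom_url` and `prom_query` are hypothetical helpers, the host names and port 30200 are the ones used in this walkthrough, and curl is assumed to be installed. The actual query call stays in a comment because it needs a reachable cluster.

```shell
# prom_url <host> [port]: base URL of the Prometheus NodePort service
prom_url() {
  printf 'http://%s:%s\n' "$1" "${2:-30200}"
}

# prom_query <host> <promql>: run an instant query, printing the JSON response
prom_query() {
  # -G sends the url-encoded data as a GET query string.
  curl -sG "$(prom_url "$1")/api/v1/query" --data-urlencode "query=$2"
}

# Needs a reachable cluster, so it is left commented out:
# prom_query k8s-master 'sum by (pod_name)(rate(container_cpu_usage_seconds_total{image!="", pod_name!=""}[1m]))'

prom_url k8s-master
```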

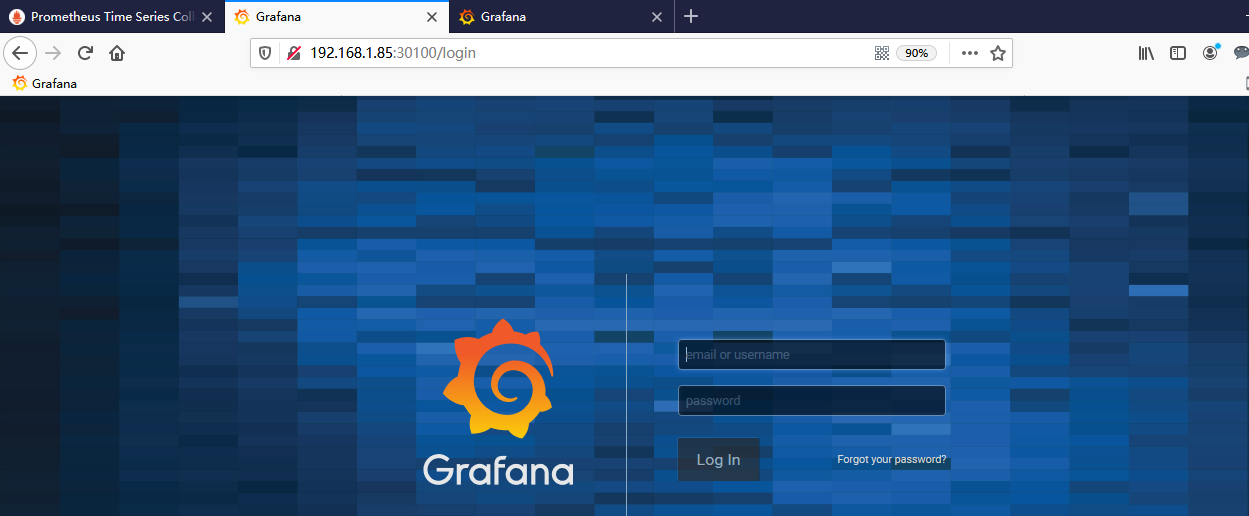

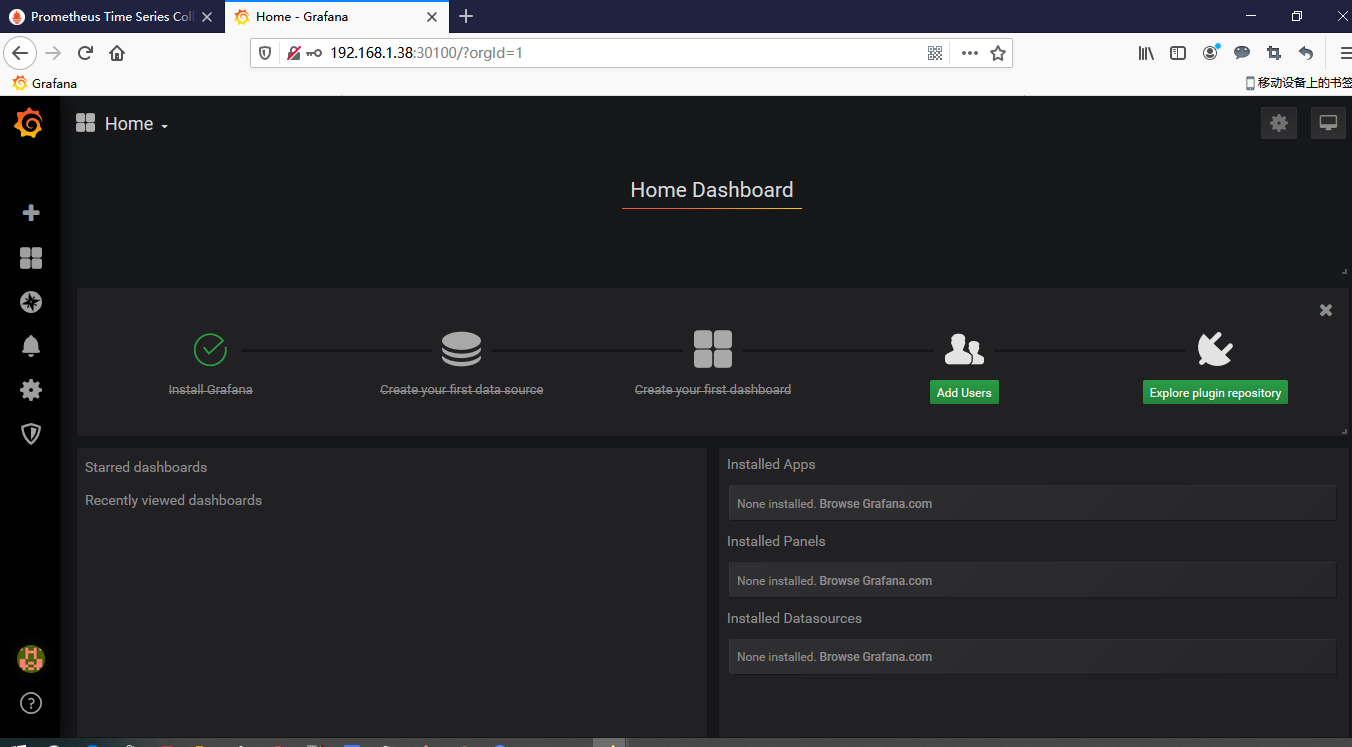

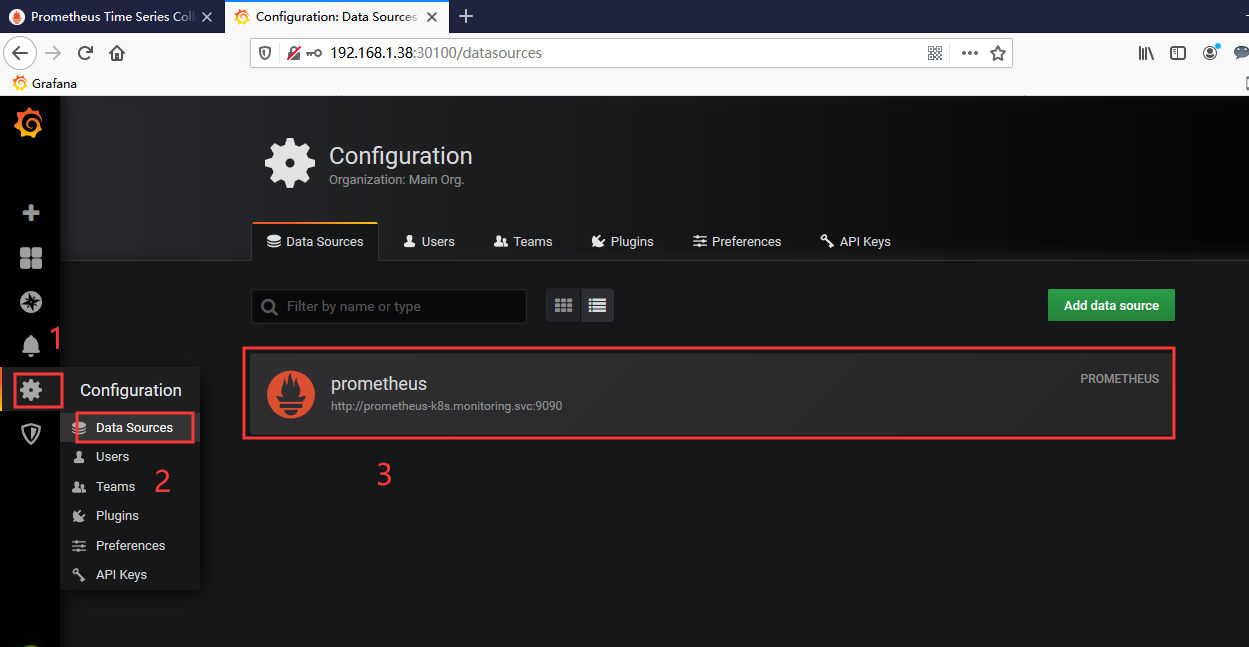

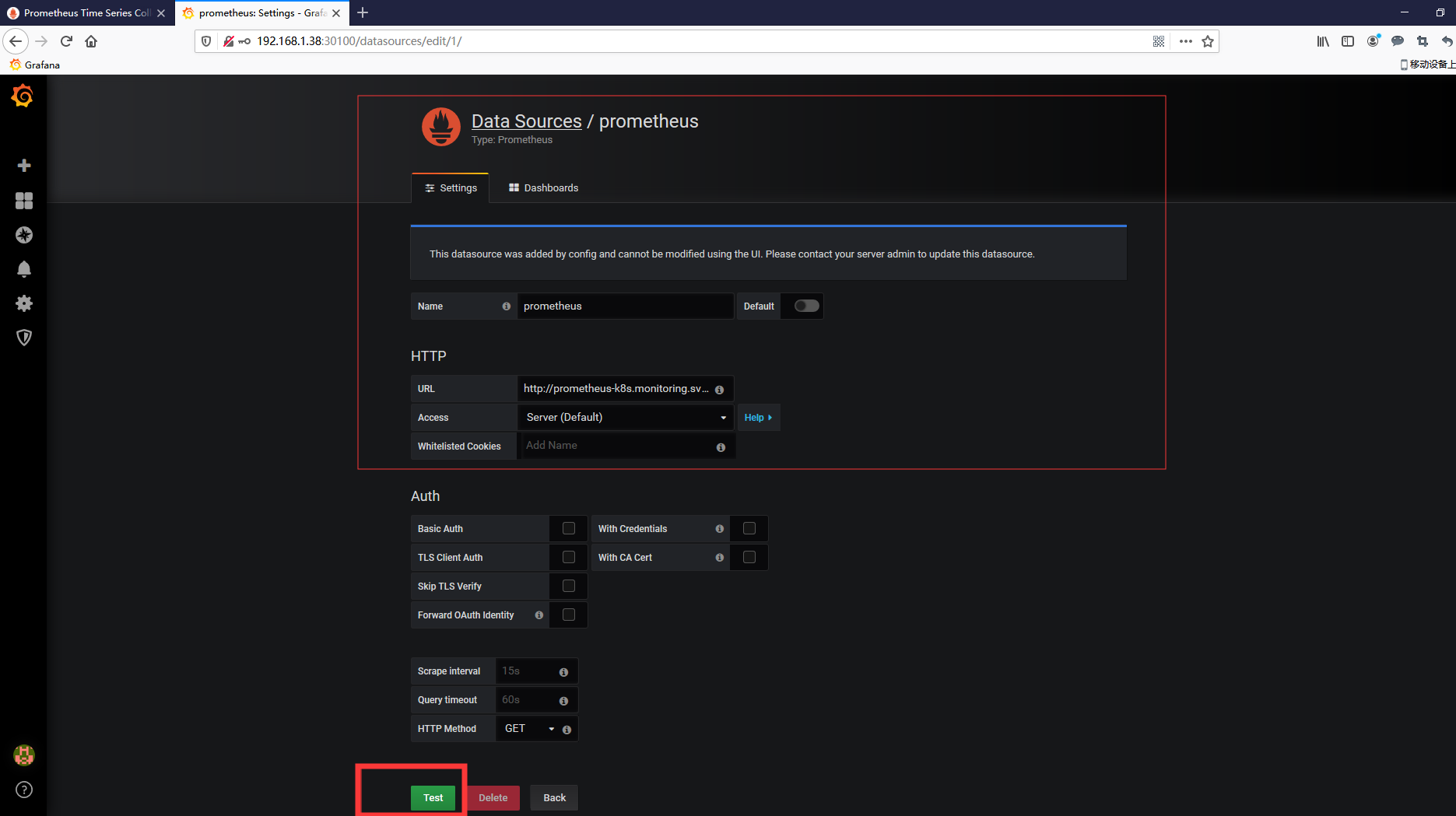

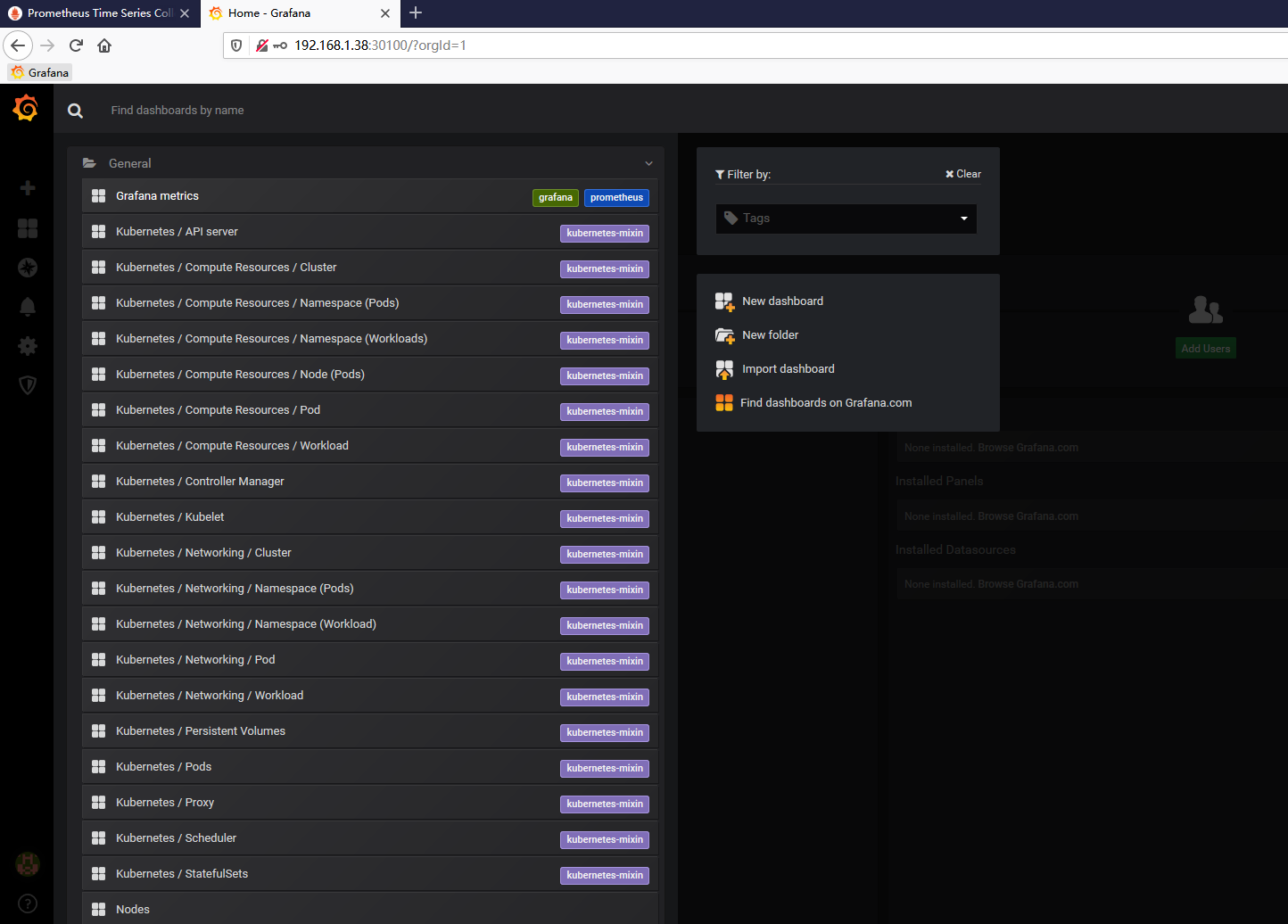

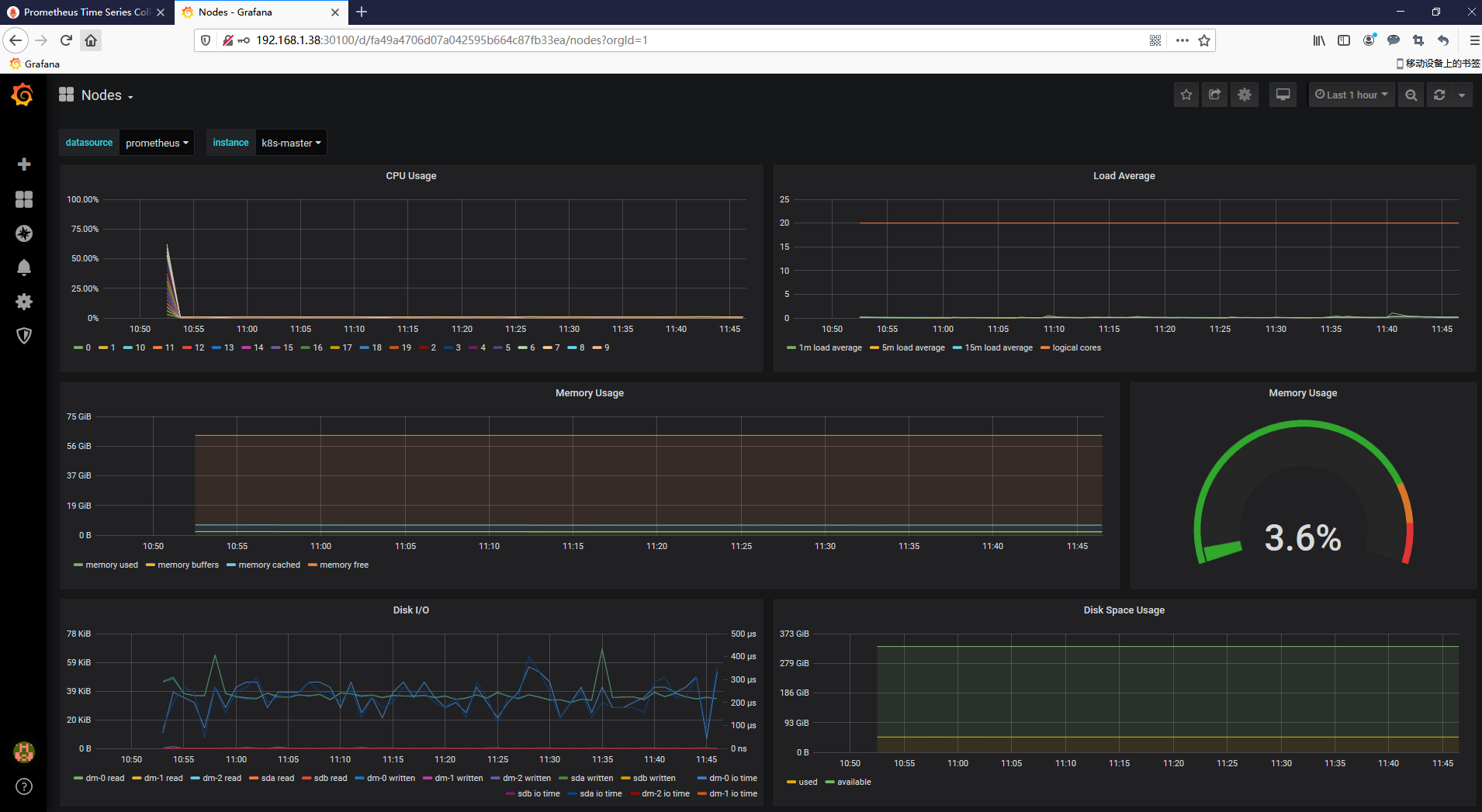

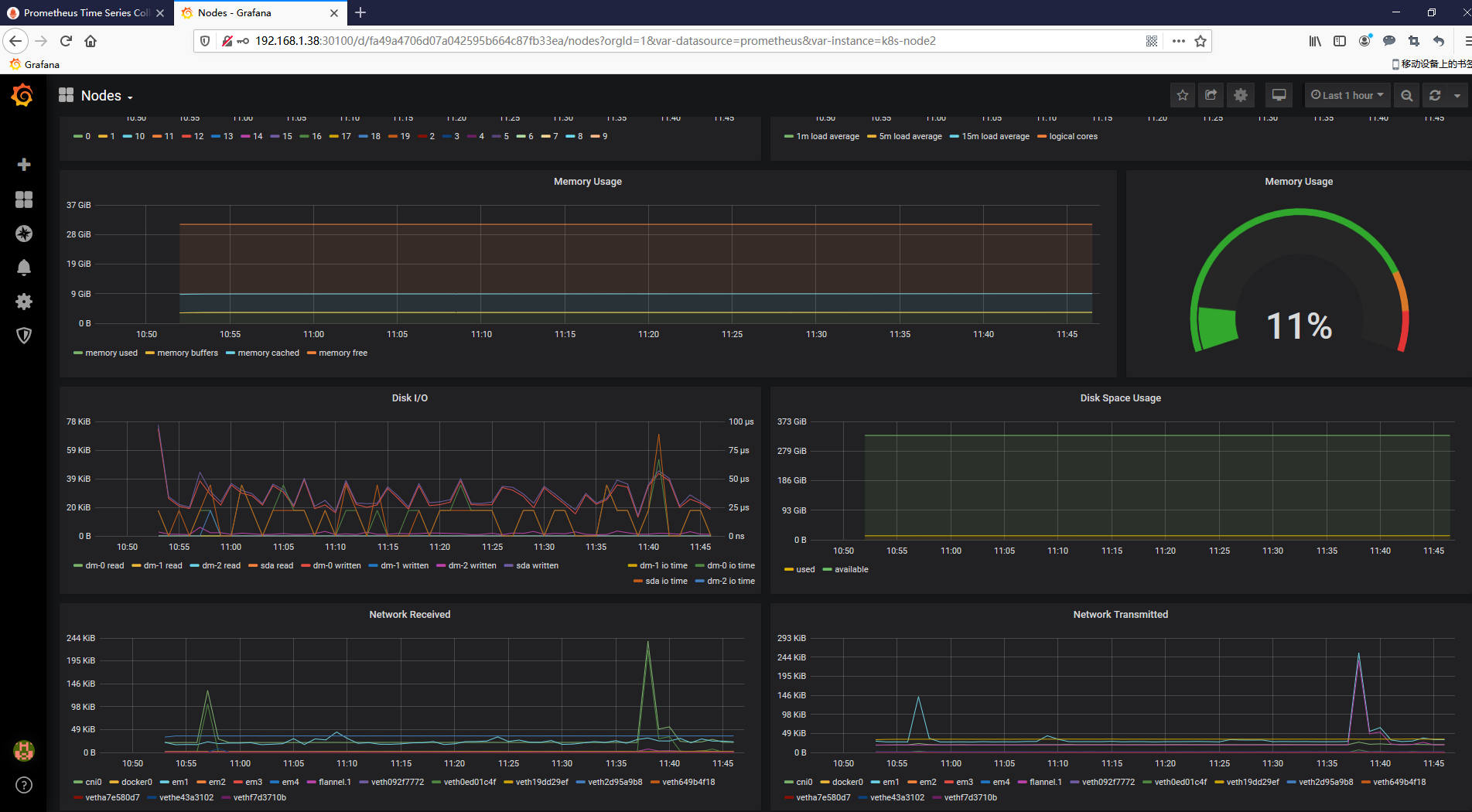

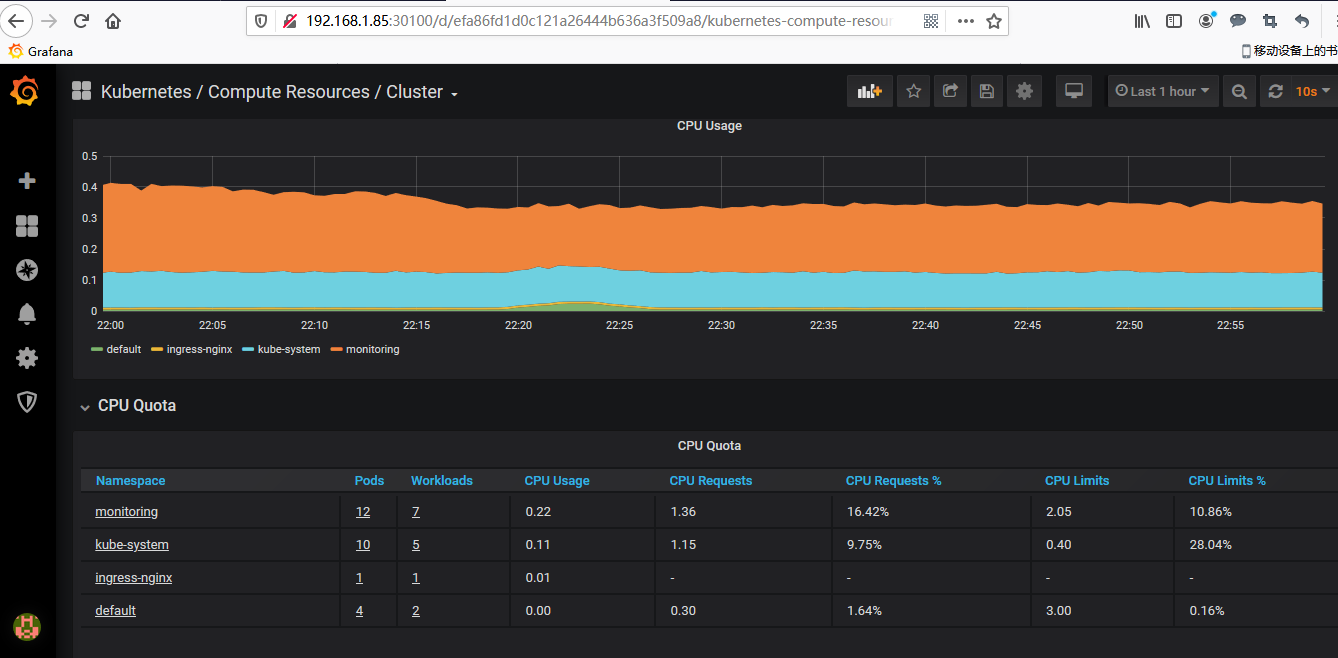

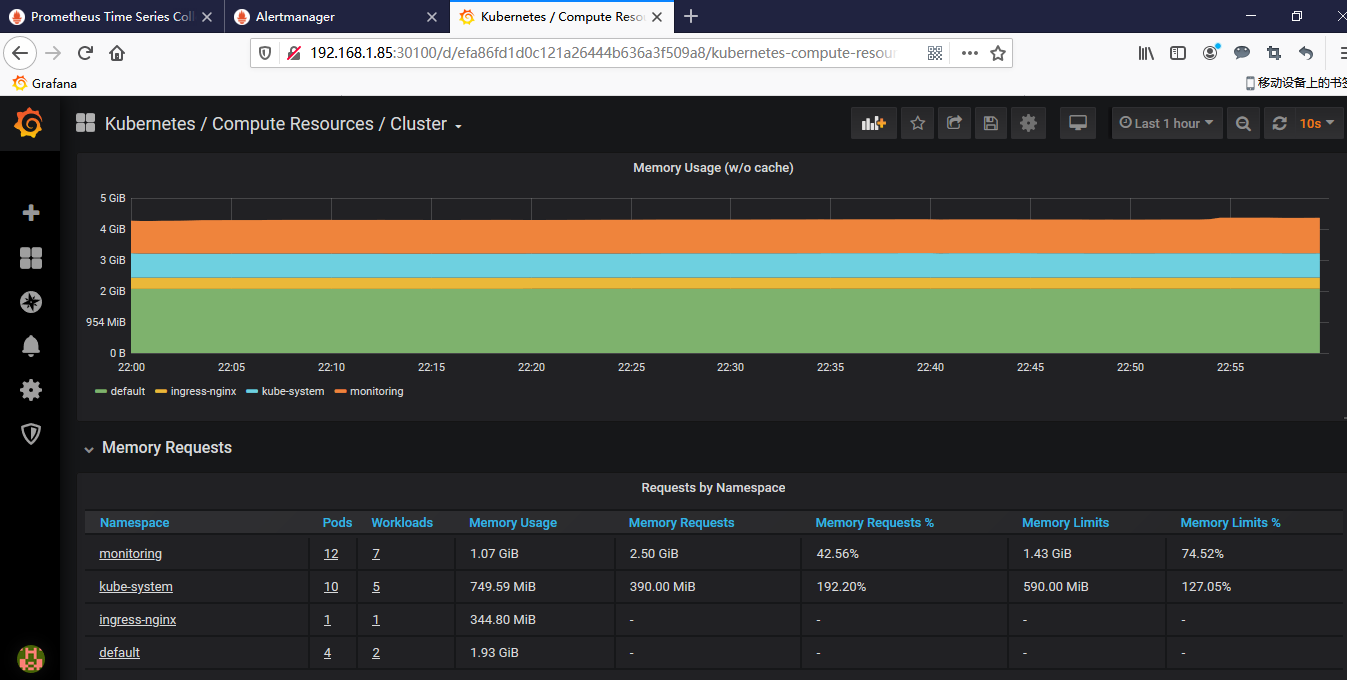

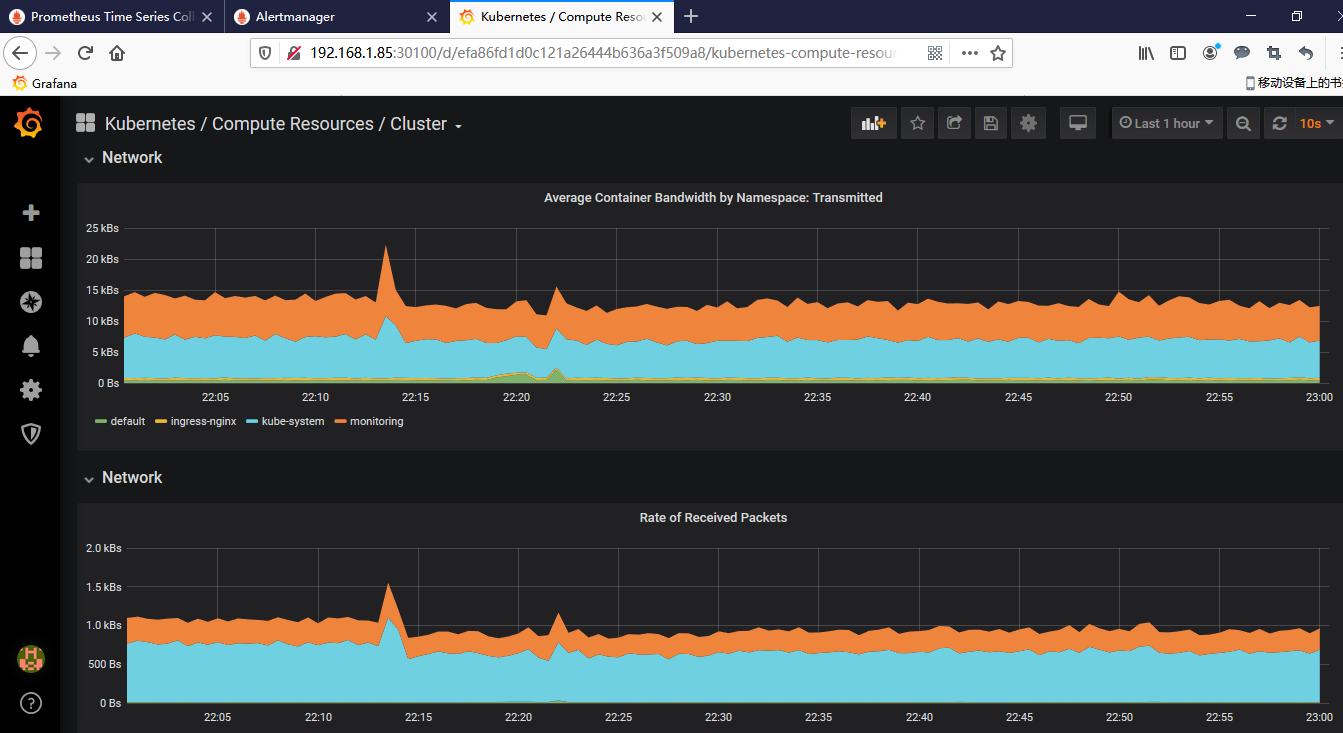

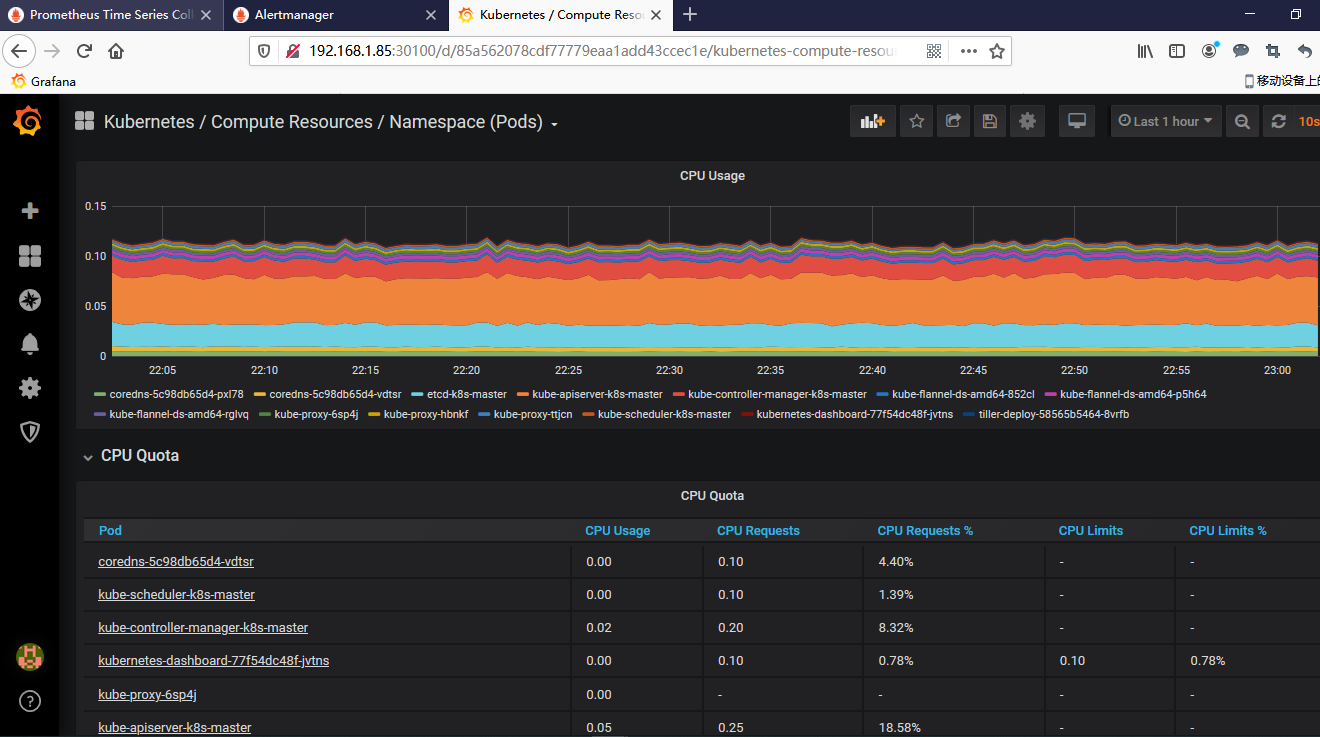

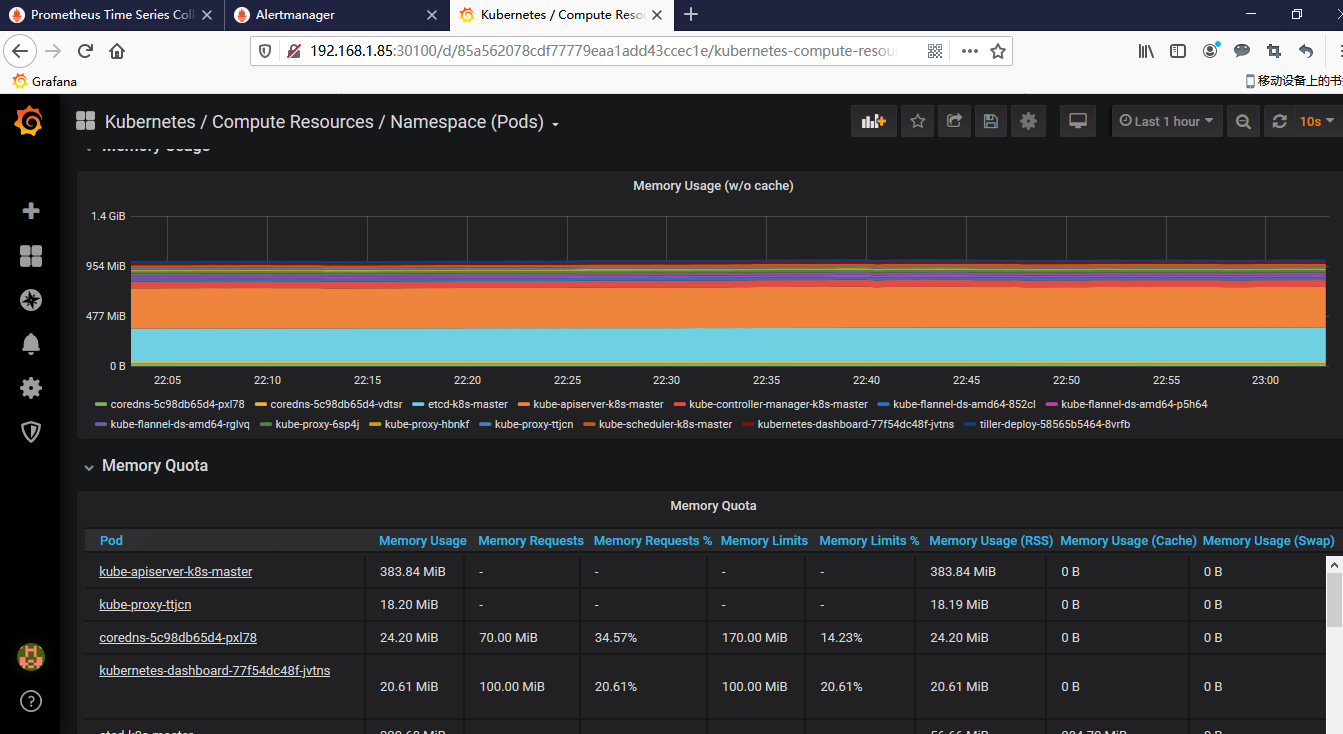

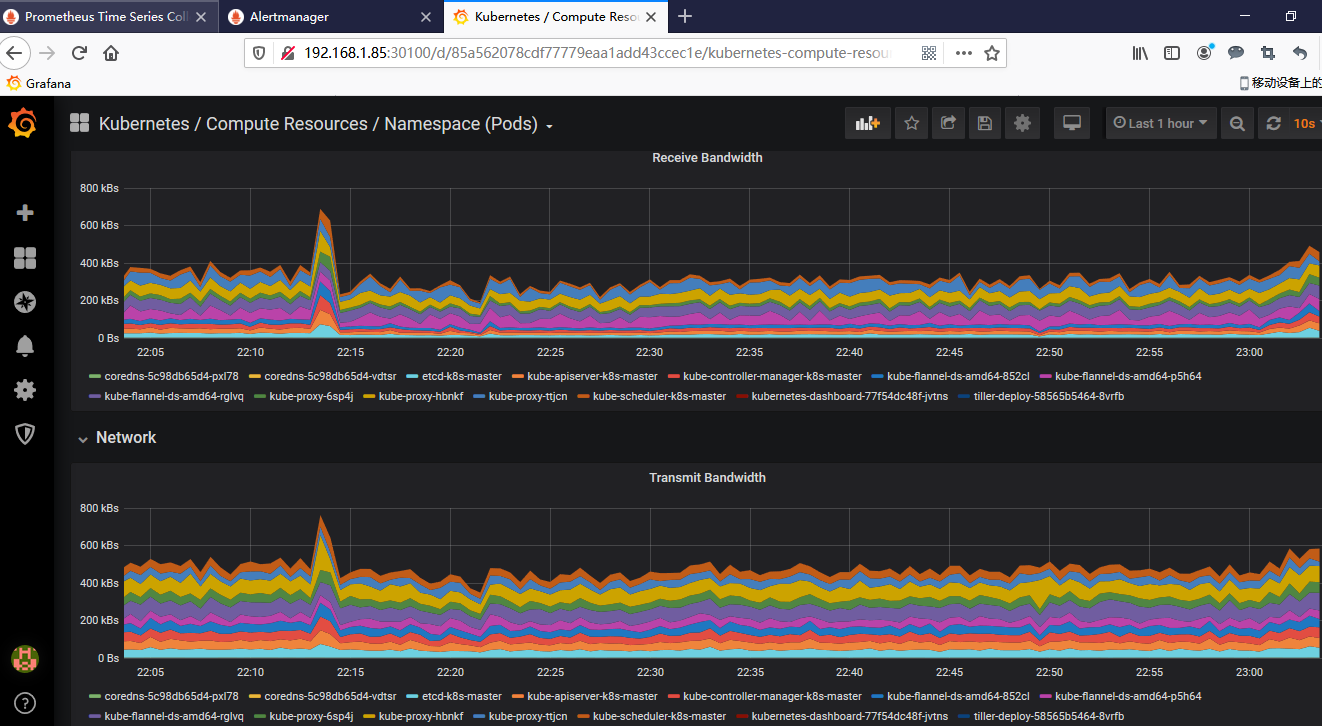

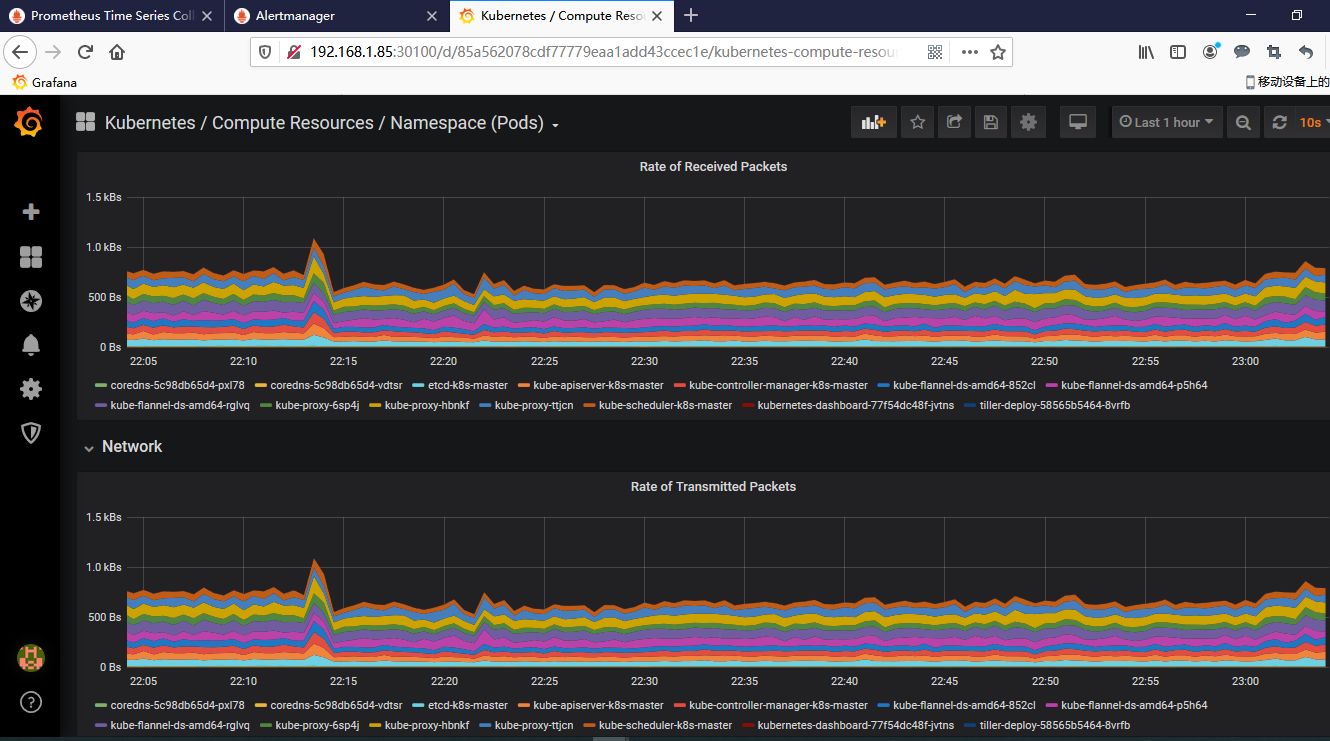

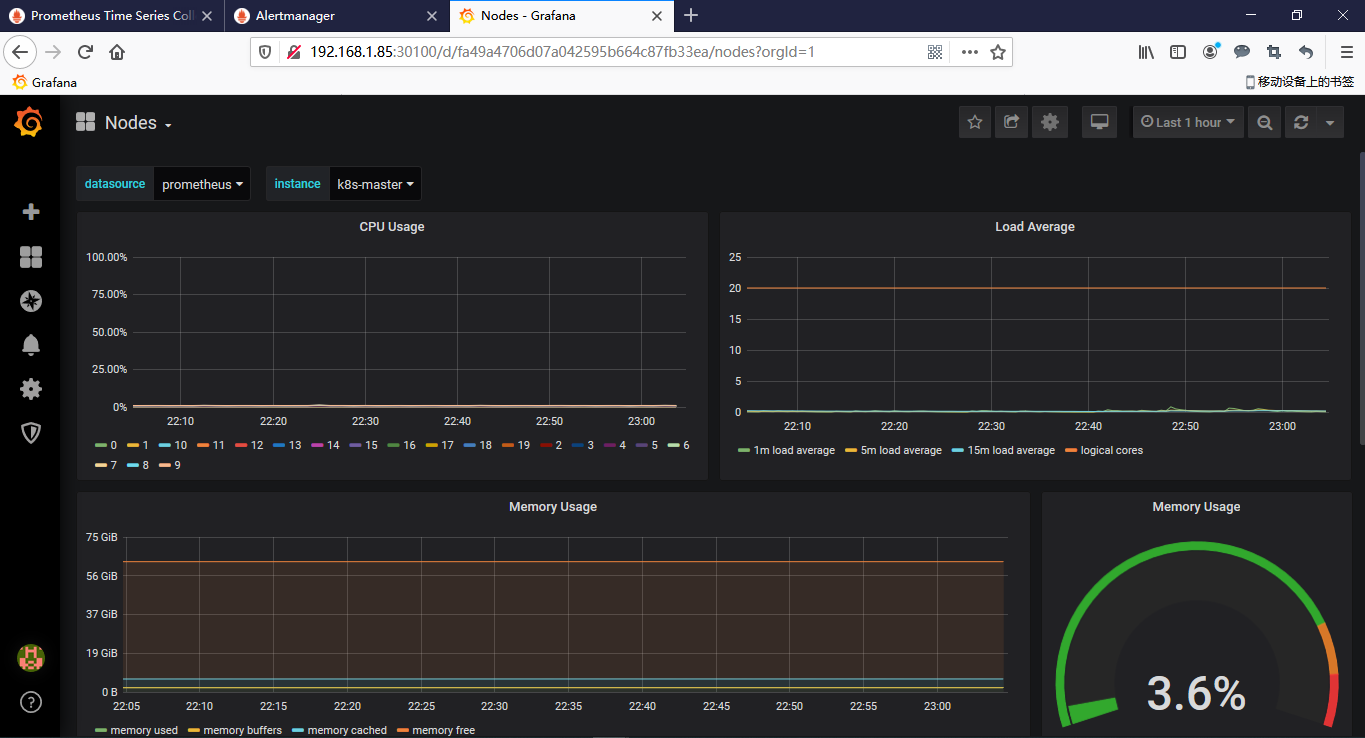

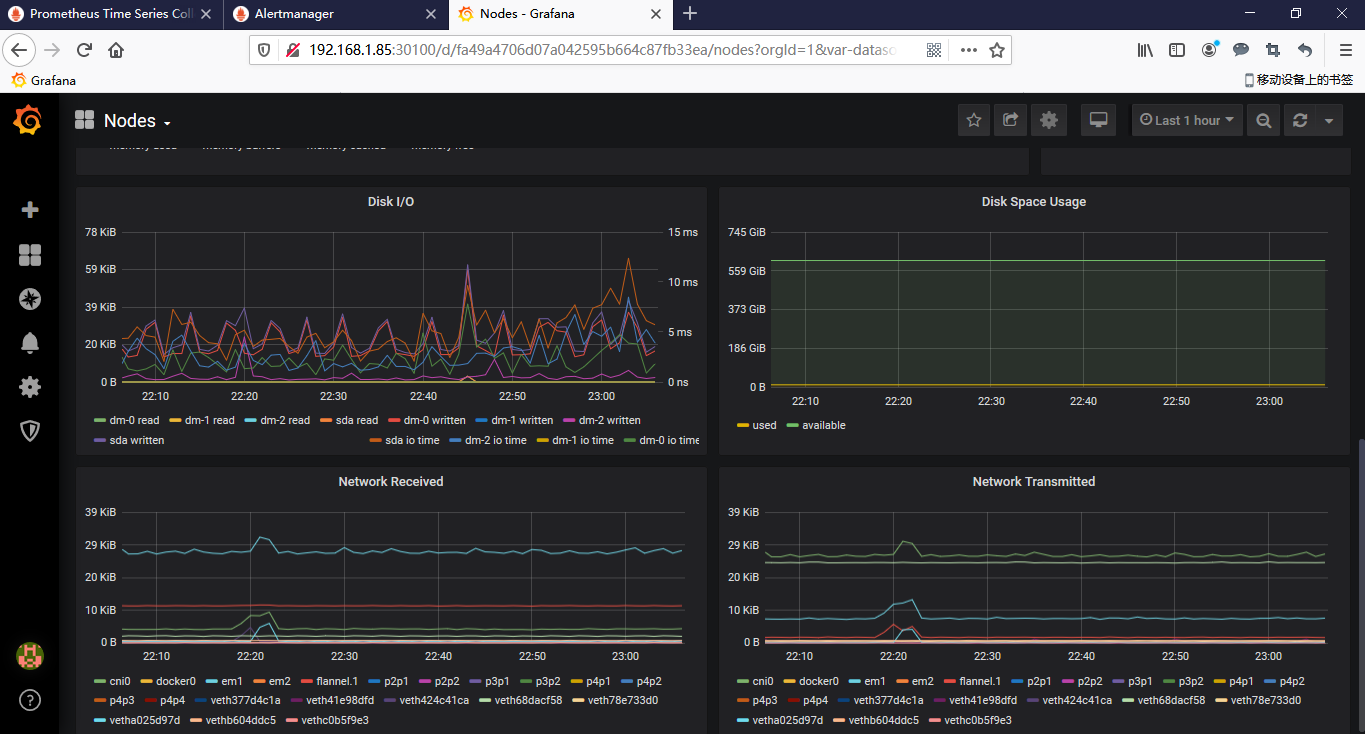

Accessing Grafana

Check the port exposed by the grafana Service:

[root@k8s-master kube-prometheus]# kubectl get svc -n monitoring |grep grafana

grafana   NodePort   10.96.206.146   <none>   3000:30100/TCP   44m

As shown above, Grafana is exposed on port 30100. In a browser, open http://k8s-master:30100, http://k8s-node1:30100, or http://k8s-node2:30100. Username/password: admin/admin
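When scripting this step, the NodePort can be pulled out of the PORT(S) field instead of being read by eye. The sketch below does that with sed. `node_port` is a hypothetical helper, and the sample field is copied from the output above.

```shell
# node_port <PORT(S) field>: "3000:30100/TCP" -> 30100
# Prints nothing for a field without a NodePort, such as "9090/TCP".
node_port() {
  printf '%s\n' "$1" | sed -n 's|^[0-9]*:\([0-9]*\)/.*$|\1|p'
}

node_port '3000:30100/TCP'

# With cluster access, the same value could be read directly (not run here):
# kubectl get svc grafana -n monitoring -o jsonpath='{.spec.ports[0].nodePort}'
```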